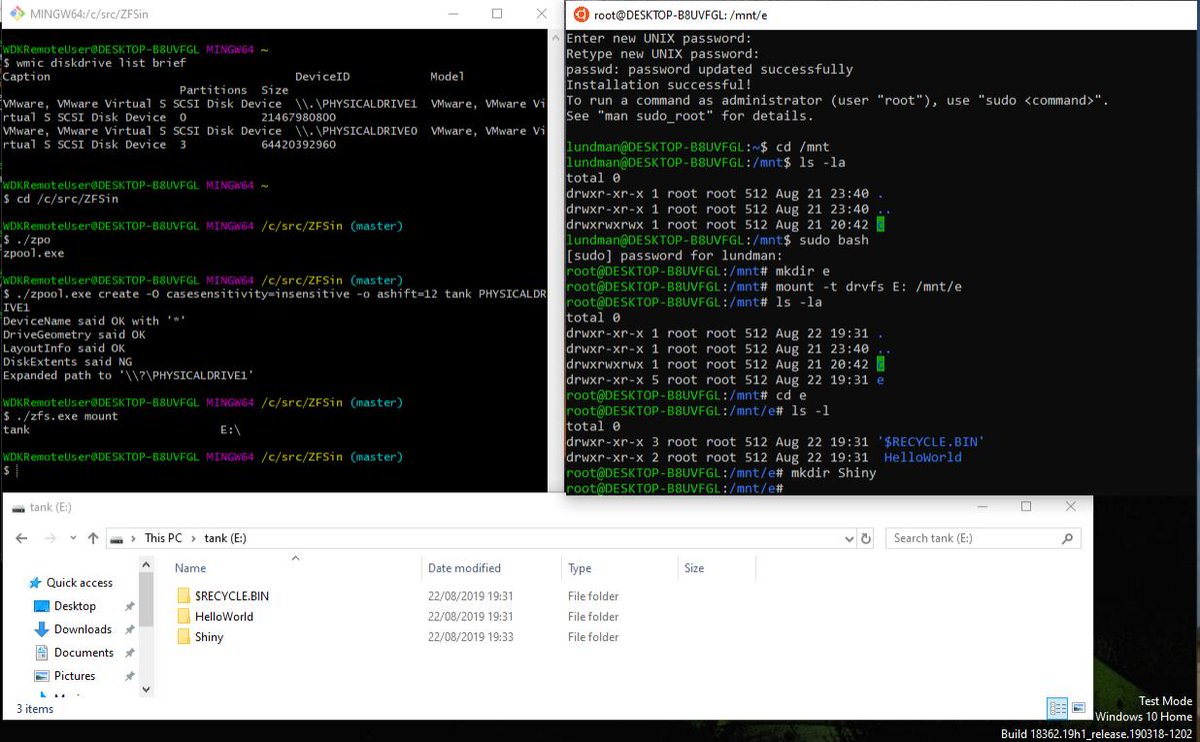

Most of the benefits of ZFS are only fully realized, or only applicable, if you let ZFS access the raw disks. Putting the disks in RAID on a RAID card is a no-go for ZFS. The only way to use ZFS effectively in a VM is to give it raw access to the drives, which can be done by PCIe passthrough of an HBA card, but Windows 10 does not support this. You can attach ZFS filesystems to Windows with software alternatives that enable read-write access, not just through VM solutions. Look at zfs-win, for example, which uses the Dokan libraries; these also enable Windows to read Linux-formatted drives like Ext2/4 by hooking into ExFAT.sys. – user352590 Jun 2 '17 at 18:30

Storage fundamentals

Of course, this is an unofficial port and Microsoft has not expressed any interest in bringing ZFS to Windows, especially given their current focus on ReFS. This developer has much of Open ZFS running within Windows as a native kernel module but among the functionality currently missing is ZVOL support, the ability to compile ZFS on top of ZFS.

- ZFS doesn't allow two systems to use it at the same time (unless it is shared through NFS or similar), and booting Windows from ZFS would be another challenge (a much more difficult one, I bet). Currently there's no good FS that works with both Windows and Linux/Unix.

- Windows Storage Spaces is the successor to Drive Extender, and adds a lot of useful functionality to it. Finally, the comparison. Let’s compare the pros and cons of ZFS and Windows Storage Spaces across a few different categories that would be important to an average user. One of the major pros to using ZFS on Linux is that it is.

This has been a long while in the making—it's test results time. To truly understand the fundamentals of computer storage, it's important to explore the impact of various conventional RAID (Redundant Array of Inexpensive Disks) topologies on performance. It's also important to understand what ZFS is and how it works. But at some point, people (particularly computer enthusiasts on the Internet) want numbers.

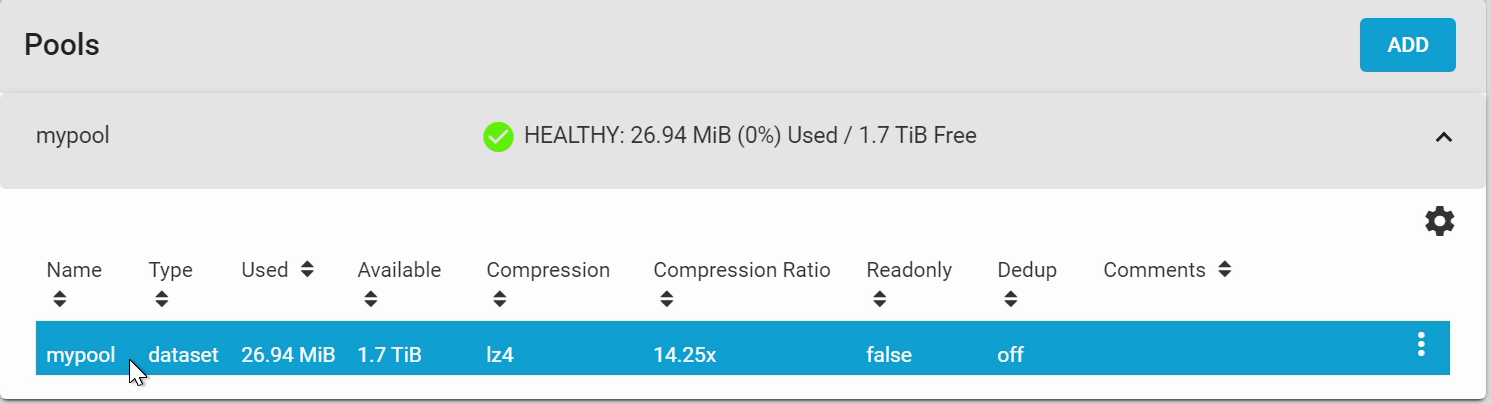

First, a quick note: This testing, naturally, builds on those fundamentals. We're going to draw heavily on lessons learned as we explore ZFS topologies here. If you aren't yet entirely solid on the difference between pools and vdevs or what ashift and recordsize mean, we strongly recommend you revisit those explainers before diving into testing and results.

And although everybody loves to see raw numbers, we urge an additional focus on how these figures relate to one another. All of our charts relate the performance of ZFS pool topologies at sizes from two to eight disks to the performance of a single disk. If you change the model of disk, your raw numbers will change accordingly—but for the most part, their relation to a single disk's performance will not.

Equipment as tested

We used the eight empty bays in our Summer 2019 Storage Hot Rod for this test. It's got oodles of RAM and more than enough CPU horsepower to chew through these storage tests without breaking a sweat.

| Specs at a glance: Summer 2019 Storage Hot Rod, as tested | |
|---|---|
| OS | Ubuntu 18.04.4 LTS |
| CPU | AMD Ryzen 7 2700X, $250 on Amazon |
| RAM | 64GB ECC DDR4 UDIMM kit, $459 at Amazon |
| Storage Adapter | LSI-9300-8i 8-port Host Bus Adapter, $148 at Amazon |
| Storage | 8x 12TB Seagate Ironwolf, $320 ea at Amazon |
| Motherboard | Asrock Rack X470D4U, $260 at Amazon |
| PSU | EVGA 850GQ Semi Modular PSU, $140 at Adorama |
| Chassis | Rosewill RSV-L4112, typically $260, currently unavailable due to CV19 |

The Storage Hot Rod's also got a dedicated LSI-9300-8i Host Bus Adapter (HBA) which isn't used for anything but the disks under test. The first four bays of the chassis have our own backup data on them—but they were idle during all tests here and are attached to the motherboard's SATA controller, entirely isolated from our test arrays.

How we tested

As always, we used fio to perform all of our storage tests. We ran them locally on the Hot Rod, and we used three basic random-access test types: read, write, and sync write. Each of the tests was run with both 4K and 1M blocksizes, and we ran the tests both with a single process and iodepth=1 as well as with eight processes with iodepth=8.

For all tests, we're using ZFS on Linux 0.7.5, as found in the main repositories for Ubuntu 18.04 LTS. It's worth noting that ZFS on Linux 0.7.5 is two years old now; there are features and performance improvements in newer versions of OpenZFS that weren't available in 0.7.5.
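The test matrix described above (three test types, two blocksizes, two levels of parallelism) works out to twelve fio runs per topology. The sketch below only prints candidate command lines; it does not run fio. The target directory, job names, `posixaio` engine, file size, and runtime are all assumptions for illustration, since the article does not list its exact fio arguments, and sync writes are modeled here as random writes with `--fsync=1`.

```shell
# Hypothetical directory on the pool under test (not from the article).
target=/tank/fio

# Print one fio command line per combination; nothing is executed.
fio_matrix() {
    for rw in randread randwrite syncwrite; do
        for bs in 4k 1m; do
            for par in 1 8; do
                mode=$rw
                extra=""
                # Assumption: a sync write is a random write followed by an fsync.
                if [ "$rw" = syncwrite ]; then
                    mode=randwrite
                    extra=" --fsync=1"
                fi
                echo "fio --name=$rw-$bs-j$par --directory=$target --ioengine=posixaio" \
                     "--rw=$mode --bs=$bs --numjobs=$par --iodepth=$par" \
                     "--size=1g --runtime=60 --time_based$extra"
            done
        done
    done
}

fio_matrix
```

Piping the output through `sh` would run the jobs one after another; reviewing the printed lines first is the point of keeping it a dry run.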

We tested with 0.7.5 anyway—much to the annoyance of at least one very senior OpenZFS developer—because when we ran the tests, 18.04 was the most current Ubuntu LTS and one of the most current stable distributions in general. In the next article in this series—on ZFS tuning and optimization—we'll update to the brand-new Ubuntu 20.04 LTS and a much newer ZFS on Linux 0.8.3.

Initial setup: ZFS vs mdraid/ext4

When we tested mdadm and ext4, we didn't really use the entire disk—we created a 1TiB partition at the head of each disk and used those 1TiB partitions. We also had to invoke arcane arguments—mkfs.ext4 -E lazy_itable_init=0,lazy_journal_init=0—to avoid ext4's preallocation from contaminating our results.
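For reference, the ext4 invocation mentioned above looks roughly like this when spelled out. The array device `/dev/md0` is a hypothetical name (the article does not give one), and the function only prints the command rather than formatting anything.

```shell
# Hypothetical md array device; the article does not name it.
array=/dev/md0

# Dry run: print the mkfs command with lazy initialization disabled,
# so the inode tables and journal are written at format time instead of
# in the background during later benchmarks.
format_array() {
    echo mkfs.ext4 -E lazy_itable_init=0,lazy_journal_init=0 "$array"
}

format_array
```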

Using these relatively small partitions instead of the entire disks was a practical necessity, since ext4 needs to grovel over the entire created filesystem and disperse preallocated metadata blocks throughout. If we had used the full disks, the usable space on the eight-disk RAID6 topology would have been roughly 65TiB—and it would have taken several hours to format, with similar agonizing waits for every topology tested.

ZFS, happily, doesn't need or want to preallocate metadata blocks—it creates them on the fly as they become necessary instead. So we fed ZFS each 12TB Ironwolf disk in its entirety, and we didn't need to wait through lengthy formatting procedures—each topology, even the largest, was ready for use a second or two after creation, with no special arguments needed.

ZFS vs conventional RAID

A conventional RAID array is a simple abstraction layer that sits between a filesystem and a set of disks. It presents the entire array as a virtual 'disk' device that, from the filesystem's perspective, is indistinguishable from an actual, individual disk—even if it's significantly larger than the largest single disk might be.

ZFS is an entirely different animal, and it encompasses functions that normally might occupy three separate layers in a traditional Unixlike system. It's a logical volume manager, a RAID system, and a filesystem all wrapped into one. Merging traditional layers like this has caused many a senior admin to grind their teeth in outrage, but there are very good reasons for it.

There is an absolute ton of features ZFS offers, and users unfamiliar with them are highly encouraged to take a look at our 2014 coverage of next-generation filesystems for a basic overview as well as our recent ZFS 101 article for a much more comprehensive explanation.

Megabytes vs Mebibytes

As in the last article, our units of performance measurement here are kibibytes (KiB) and mebibytes (MiB). A kibibyte is 1,024 bytes, a mebibyte is 1,024 kibibytes, and so forth—in contrast to a kilobyte, which is 1,000 bytes, and a megabyte, which is 1,000 kilobytes.

Kibibytes and their big siblings have always been the standard units for computer storage. Prior to the 1990s, computer professionals simply referred to them as K and M—and used the inaccurate metric prefixes when they spelled them out. But any time your operating system refers to GB, MB, or KB—whether in terms of free space, network speed, or amounts of RAM—it's really referring to GiB, MiB, and KiB.

Storage vendors, unfortunately, eventually seized upon the difference between the metrics as a way to more cheaply produce 'gigabyte' drives and then 'terabyte' drives—so a 500GB SSD is really only 465 GiB, and 12TB hard drives like the ones we're testing today are really only 10.9TiB each.
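The conversions quoted above are easy to check by hand. This small sketch divides a decimal byte count by a power of 1,024 using awk; the helper name `to_binary` is made up for this example. The third call reproduces the roughly 65TiB figure given earlier for an eight-disk RAID6 array, assuming six disks' worth of usable space.

```shell
# to_binary BYTES POWER: express BYTES in units of 1024^POWER
# (POWER 3 = GiB, POWER 4 = TiB), rounded to one decimal place.
to_binary() {
    awk -v bytes="$1" -v power="$2" 'BEGIN { printf "%.1f\n", bytes / (1024 ^ power) }'
}

to_binary 500000000000 3      # 500GB SSD in GiB           -> 465.7
to_binary 12000000000000 4    # one 12TB disk in TiB       -> 10.9
to_binary 72000000000000 4    # six 12TB data disks in TiB -> 65.5
```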

This section describes using the Zettabyte File System (ZFS) to store virtual disk backends exported to guest domains. ZFS provides a convenient and powerful solution to create and manage virtual disk backends. ZFS enables:

Storing disk images in ZFS volumes or ZFS files

Using snapshots to backup disk images

Using clones to duplicate disk images and provision additional domains

Refer to the Solaris ZFS Administration Guide for more information about using ZFS.

In the following descriptions and examples, the primary domain is also the service domain where disk images are stored.

Configuring a ZFS Pool in a Service Domain

To store the disk images, first create a ZFS storage pool in the service domain. For example, this command creates the ZFS storage pool ldmpool containing the disk c1t50d0 in the primary domain.
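The command itself is not shown in this copy of the text. A minimal sketch, using the pool and disk names given above, written as a dry run: the function only prints the command, and removing `echo` would run it (as root, in the primary domain).

```shell
pool=ldmpool    # pool name from the text
disk=c1t50d0    # disk name from the text

# Dry run: print the pool-creation command instead of executing it.
create_pool() {
    echo zpool create "$pool" "$disk"
}

create_pool
```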

Storing Disk Images With ZFS

The following command creates a disk image for guest domain ldg1. A ZFS file system for this guest domain is created, and all disk images of this guest domain will be stored on that file system.
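The command is missing from this copy. Assuming the per-domain file system is simply named after the domain inside the `ldmpool` pool, it would look like the following dry run (it prints the command only).

```shell
pool=ldmpool    # pool name from the text
domain=ldg1     # guest domain from the text

# Dry run: print the command that creates the per-domain file system.
create_domain_fs() {
    echo zfs create "$pool/$domain"
}

create_domain_fs
```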

Disk images can be stored on ZFS volumes or ZFS files. Creating a ZFS volume, whatever its size, is quick using the zfs create -V command. On the other hand, ZFS files have to be created using the mkfile command. The command can take some time to complete, especially if the file to create is quite large, which is often the case when creating a disk image.

Both ZFS volumes and ZFS files can take advantage of ZFS features such as snapshot and clone, but a ZFS volume is a pseudo device while a ZFS file is a regular file.

If the disk image is to be used as a virtual disk onto which the Solaris OS is to be installed, then it should be large enough to contain:

Installed software – about 6 gigabytes

Swap partition – about 1 gigabyte

Extra space to store system data – at least 1 gigabyte

Therefore, the size of a disk image to install the entire Solaris OS should be at least 8 gigabytes.

Examples of Storing Disk Images With ZFS

The following examples show how to:

Create a 10-gigabyte image on a ZFS volume or file.

Export the ZFS volume or file as a virtual disk. The syntax to export a ZFS volume or file is the same, but the path to the backend is different.

Assign the exported ZFS volume or file to a guest domain.

When the guest domain is started, the ZFS volume or file appears as a virtual disk on which the Solaris OS can be installed.

Create a Disk Image Using a ZFS Volume

For example, create a 10-gigabyte disk image on a ZFS volume.
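A sketch of the volume case, using `zfs create -V` as described earlier. The dataset path `ldmpool/ldg1/disk0` is an assumption built from the pool, domain, and disk names used elsewhere in the text; the function prints the command rather than running it.

```shell
volume=ldmpool/ldg1/disk0   # assumed dataset path for the disk image
size=10gb                   # 10-gigabyte image, as in the text

# Dry run: print the command that creates a fixed-size ZFS volume.
create_volume_image() {
    echo zfs create -V "$size" "$volume"
}

create_volume_image
```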

Create a Disk Image Using a ZFS File

For example, create a 10-gigabyte disk image on a ZFS file.
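A sketch of the file case: create a file system to hold the image, then fill it with `mkfile`. The file name `file` and the default mount point under `/ldmpool` are assumptions; both commands are only printed.

```shell
dataset=ldmpool/ldg1/disk0   # assumed file system that will hold the image
image=/$dataset/file         # assumed image path under the default mount point

# Dry run: print the two commands in order.
create_file_image() {
    echo zfs create "$dataset"
    echo mkfile 10g "$image"
}

create_file_image
```

As noted earlier, `mkfile` has to write out the whole file, so this step takes noticeably longer than creating a volume of the same size.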

Export the ZFS Volume

Export the ZFS volume as a virtual disk.
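A sketch of the export using `ldm add-vdsdev`. The volume name `ldg1_disk0` and the virtual disk service `primary-vds0` are assumed names for illustration; a ZFS volume is addressed through its device node under `/dev/zvol/dsk`. The command is printed, not run.

```shell
backend=/dev/zvol/dsk/ldmpool/ldg1/disk0   # device node of the ZFS volume
export_name=ldg1_disk0@primary-vds0        # assumed volume name and disk service

# Dry run: print the command that exports the volume as a virtual disk backend.
export_volume() {
    echo ldm add-vdsdev "$backend" "$export_name"
}

export_volume
```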

Export the ZFS File

Export the ZFS file as a virtual disk.
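The file case uses the same `ldm add-vdsdev` syntax with a different backend path. As before, the image path, the volume name `ldg1_disk0`, and the service `primary-vds0` are assumptions, and the command is only printed.

```shell
backend=/ldmpool/ldg1/disk0/file      # assumed path of the image file
export_name=ldg1_disk0@primary-vds0   # assumed volume name and disk service

# Dry run: print the command that exports the file as a virtual disk backend.
export_file() {
    echo ldm add-vdsdev "$backend" "$export_name"
}

export_file
```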

Assign the ZFS Volume or File to a Guest Domain

Assign the ZFS volume or file to a guest domain; in this example, ldg1.
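A sketch of the assignment using `ldm add-vdisk`. The virtual disk name `disk0` and the exported name `ldg1_disk0@primary-vds0` are assumptions carried over for illustration; the command is printed rather than executed.

```shell
vdisk=disk0                           # assumed name of the disk inside the guest
export_name=ldg1_disk0@primary-vds0   # assumed exported volume and disk service
domain=ldg1                           # guest domain from the text

# Dry run: print the command that attaches the exported backend to the domain.
assign_disk() {
    echo ldm add-vdisk "$vdisk" "$export_name" "$domain"
}

assign_disk
```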

Creating a Snapshot of a Disk Image

When your disk image is stored on a ZFS volume or on a ZFS file, you can create snapshots of this disk image by using the ZFS snapshot command.

Before you create a snapshot of the disk image, make sure that the disk is not currently in use in the guest domain, so that the data stored on the disk image is coherent. There are several ways to ensure that a disk is not in use in a guest domain. You can either:

Stop and unbind the guest domain. This is the safest solution, and this is the only solution available if you want to create a snapshot of a disk image used as the boot disk of a guest domain.

Alternatively, you can unmount any slices of the disk you want to snapshot that are used in the guest domain, and ensure that no slice is in use in the guest domain.

In this example, because of the ZFS layout, the command to create a snapshot of the disk image is the same whether the disk image is stored on a ZFS volume or on a ZFS file.

Create a Snapshot of a Disk Image

Create a snapshot of the disk image that was created for the ldg1 domain, for example.
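A sketch of the snapshot step. The dataset path `ldmpool/ldg1/disk0` and the snapshot name `version_1` are assumptions for illustration; the same command applies whether the image lives on a volume or in a file. It is printed, not run.

```shell
dataset=ldmpool/ldg1/disk0   # assumed dataset holding the disk image
snapname=version_1           # assumed snapshot name

# Dry run: print the snapshot command.
snapshot_image() {
    echo zfs snapshot "$dataset@$snapname"
}

snapshot_image
```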

Using Clone to Provision a New Domain

Once you have created a snapshot of a disk image, you can duplicate this disk image by using the ZFS clone command. Then the cloned image can be assigned to another domain. Cloning a boot disk image quickly creates a boot disk for a new guest domain without having to perform the entire Solaris OS installation process.

For example, if the disk0 created was the boot disk of domain ldg1, do the following to clone that disk to create a boot disk for domain ldg2.
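A sketch of the cloning step, assuming a snapshot named `version_1` already exists on the original image and that the new domain gets its own file system first. Both commands are printed rather than executed.

```shell
source_snap=ldmpool/ldg1/disk0@version_1   # assumed existing snapshot of the boot disk
new_fs=ldmpool/ldg2                        # file system for the new domain
new_disk=$new_fs/disk0                     # clone target

# Dry run: print the commands that prepare ldg2 and clone the boot disk into it.
clone_boot_disk() {
    echo zfs create "$new_fs"
    echo zfs clone "$source_snap" "$new_disk"
}

clone_boot_disk
```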

Then ldmpool/ldg2/disk0 can be exported as a virtual disk and assigned to the new ldg2 domain. The domain ldg2 can directly boot from that virtual disk without having to go through the OS installation process.

Cloning a Boot Disk Image

When a boot disk image is cloned, the new image is exactly the same as the original boot disk, and it contains any information that has been stored on the boot disk before the image was cloned, such as the host name, the IP address, the mounted file system table, or any system configuration or tuning.

Because the mounted file system table is the same on the original boot disk image and on the cloned disk image, the cloned disk image has to be assigned to the new domain in the same order as it was on the original domain. For example, if the boot disk image was assigned as the first disk of the original domain, then the cloned disk image has to be assigned as the first disk of the new domain. Otherwise, the new domain is unable to boot.

If the original domain was configured with a static IP address, then a new domain using the cloned image starts with the same IP address. In that case, you can change the network configuration of the new domain by using the sys-unconfig(1M) command. To avoid this problem you can also create a snapshot of a disk image of an unconfigured system.

If the original domain was configured with the Dynamic Host Configuration Protocol (DHCP), then a new domain using the cloned image also uses DHCP. In that case, you do not need to change the network configuration of the new domain because it automatically receives an IP address and its network configuration as it boots.

Note –

The host ID of a domain is not stored on the boot disk, but it is assigned by the Logical Domains Manager when you create a domain. Therefore, when you clone a disk image, the new domain does not keep the host ID of the original domain.

Create a Snapshot of a Disk Image of an Unconfigured System

Bind and start the original domain.

Execute the sys-unconfig command.

After the sys-unconfig command completes, the domain halts.

Stop and unbind the domain; do not reboot it.

Take a snapshot of the domain boot disk image.

For example:
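The example is missing from this copy. The sketch below covers the last two steps of the procedure above (stop and unbind, then snapshot), assuming the domain is `ldg1`, its boot disk image is `ldmpool/ldg1/disk0`, and the snapshot is called `unconfigured`. It prints the commands only; `sys-unconfig` must already have been run inside the guest.

```shell
domain=ldg1                  # assumed original domain
dataset=ldmpool/ldg1/disk0   # assumed boot disk image
snapname=unconfigured        # assumed snapshot name

# Dry run: print the commands that stop the halted domain, unbind it,
# and snapshot its boot disk image.
snapshot_unconfigured() {
    echo ldm stop-domain "$domain"
    echo ldm unbind-domain "$domain"
    echo zfs snapshot "$dataset@$snapname"
}

snapshot_unconfigured
```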

At this point you have the snapshot of the boot disk image of an unconfigured system.

Clone this image to create a new domain which, when first booted, asks for the configuration of the system.